Making Competencies Work

Most competency frameworks fail because they’re one-size-fits-none, mix traits with behaviours, and aren’t validated. This practical guide shows how to design for purpose, map by job family, write measurable indicators, choose fair scales, validate with subject matter experts (SMEs), and link assessment to learning and career pathways. It also shows where AI-assisted modelling helps without creating duplicates or bias.

Why many frameworks miss the mark

- Generic libraries feel vague; staff can’t see themselves in the wording.

- Mixed constructs: traits/abilities get blended with behaviours, making ratings subjective.

- Overlapping levels and broad statements → inconsistent assessment.

- Weak validation: little evidence the model predicts performance; correlation ≠ causation.

- One set for everything: the same items used for values, leadership, and technical work.

But does one size really fit all?

Decide purpose first (you will likely need more than one framework)

To avoid these validity and credibility issues, be clear about the purpose of your competency framework. How are you going to use competencies?

- To aid recruitment and selection?

- To identify job-related knowledge and skill gaps?

- For competency-based learning and development?

- To develop staff along career pathways?

- To identify and develop talent for your succession pipeline?

- To shape behaviour and performance?

It is highly likely you will need more than one competency set/framework.

Competency Types

Capabilities and competencies fall into three buckets:

- Performance-related (values/citizenship): values-based behaviours used in performance reviews.

- Role competencies (core/leadership/technical): used for readiness, mobility, and risk mitigation.

- Capabilities (selection/credentialing): certifications and licences tracked as role requirements; keep these separate from behaviours and competencies.

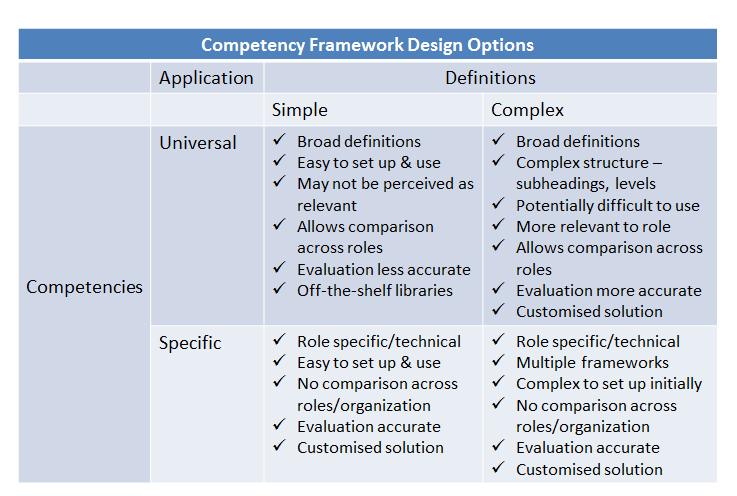

Framework Options (pick per use case)

Competency frameworks vary on two dimensions: specific versus universal application, and simple versus complex definitions.

Choose the design that matches the purpose of each framework. You may have a mixture in your competency management system.

Simple definition + universal application suits performance management of small sets of values-based behaviours and core competencies.

Complex definition + universal application suits multi-level leadership competencies.

Simple definition + specific application suits core functional competencies, e.g. ‘sales competencies’.

Complex definition + specific application suits job-specific competencies with sub-headings and multiple proficiency levels, and high-risk domains (clinical, engineering).

Your platform should support parallel approaches in one system.

Five steps to make competencies work

1) Map by job family (re-use before you write)

Create a shared set of competencies for each job family, then make small additions for role-specific requirements. Before adding an item, use filters and search to check whether an equivalent already exists, so you avoid duplication.
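The re-use-first approach can be sketched in Python. This is a minimal illustration, assuming a library held as plain text items and using the standard library's `difflib.SequenceMatcher` as a stand-in for your platform's search and filters; the job family, item wording, `build_role_profile` function, and the 0.8 similarity threshold are all hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical job-family library: one shared set re-used by every role in the family.
JOB_FAMILY_SETS = {
    "Customer Service": [
        "Resolves customer enquiries at first contact",
        "Records customer interactions in the CRM",
        "Escalates complaints according to policy",
    ],
}


def similarity(a: str, b: str) -> float:
    """Case-insensitive similarity ratio between 0.0 and 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def build_role_profile(job_family, role_additions, threshold=0.8):
    """Start from the job-family set and add only role items with no near-duplicate.

    The threshold is an assumed cut-off; tune it against your own library.
    Returns (profile, flagged), where flagged items need a human check.
    """
    profile = list(JOB_FAMILY_SETS[job_family])  # copy so the shared set is untouched
    flagged = []
    for item in role_additions:
        if any(similarity(item, existing) >= threshold for existing in profile):
            flagged.append(item)  # likely duplicate: review instead of adding
        else:
            profile.append(item)
    return profile, flagged


profile, flagged = build_role_profile(
    "Customer Service",
    [
        "Resolves customer enquiries at the first contact",  # near-duplicate wording
        "Processes refunds within delegated authority",  # genuinely role-specific
    ],
)
print(len(profile), flagged)  # 4 ['Resolves customer enquiries at the first contact']
```

Flagging rather than silently dropping keeps the final decision with a person, since two similar-looking items can occasionally describe different behaviours.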

2) Define measurable competency indicators

Use the formula Verb + context + standard.

Keep one behaviour per indicator. If you use proficiency levels, step each level up by task scope, independence, risk, and complexity.
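One way to hold indicators in a tool is as the three parts of the formula, with a simple wording check. The sketch below is an assumption, not a feature of any particular platform: the `Indicator` class, the `lint` function, the example wording, and the vague-word list are hypothetical, and the checks are heuristics that flag wording for an SME to review rather than reject it.

```python
from dataclasses import dataclass

# Illustrative, not exhaustive: words that usually signal a vague indicator.
VAGUE_WORDS = {"effective", "effectively", "good", "appropriate", "appropriately"}


@dataclass
class Indicator:
    verb: str      # observable action, e.g. "Reconciles"
    context: str   # what/where, e.g. "daily till takings against the sales report"
    standard: str  # measurable standard, e.g. "within 30 minutes of close"

    def text(self) -> str:
        """Render the indicator as verb + context + standard."""
        parts = (self.verb, self.context, self.standard)
        return " ".join(p.strip() for p in parts if p.strip())


def lint(ind: Indicator) -> list[str]:
    """Return wording warnings (heuristics only; a human makes the final call)."""
    warnings = []
    words = {w.strip(".,;:").lower() for w in ind.text().split()}
    if words & VAGUE_WORDS:
        warnings.append("vague adjective: specify what it means")
    # A conjunction in the verb part ("Plans and delivers") usually means two behaviours.
    if {"and", "or"} & set(ind.verb.lower().split()):
        warnings.append("more than one behaviour: split into separate indicators")
    if not ind.standard.strip():
        warnings.append("no standard: add a measurable criterion")
    return warnings


good = Indicator("Reconciles", "daily till takings against the sales report", "within 30 minutes of close")
bad = Indicator("Plans and delivers", "effective team briefings", "")
print(good.text())
print(lint(good))  # []
print(lint(bad))   # three warnings
```

The conjunction check looks only at the verb part, because the context may legitimately contain "and" (for example, "cash and card takings").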

3) Choose scales that fit the assessment method

- Agreement or frequency scales for values-based behaviours and leadership competencies

- Yes/No for strict standards

- Proficiency level scales if roles have a proficiency level requirement

- Competency level description scales where proficiency levels are not used

- 7-point scales give a better picture of growth over time; on shorter scales, ratings tend to cluster at the centre

- Provide an expanded explanation for each rating point
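A scale can be stored as data, with an expanded explanation for every point, and checked before use. This is a minimal sketch, assuming a 7-point frequency scale; the labels, the percentage bands in the explanations, and the `check_scale` function are hypothetical illustrations, not recommended values.

```python
# Hypothetical 7-point frequency scale. The percentage bands are illustrative:
# they show how an expanded explanation separates labels that would otherwise overlap.
FREQUENCY_SCALE = {
    1: ("Never", "Not observed in any relevant situation during the review period."),
    2: ("Rarely", "Observed in fewer than 10% of relevant situations."),
    3: ("Occasionally", "Observed in 10-29% of relevant situations."),
    4: ("Sometimes", "Observed in 30-49% of relevant situations."),
    5: ("Often", "Observed in 50-69% of relevant situations."),
    6: ("Usually", "Observed in 70-89% of relevant situations."),
    7: ("Almost always", "Observed in 90% or more of relevant situations."),
}


def check_scale(scale: dict) -> list[str]:
    """Return problems that make a scale hard to rate consistently."""
    problems = []
    if sorted(scale) != list(range(1, len(scale) + 1)):
        problems.append(f"points should run 1..{len(scale)} with no gaps")
    labels = [label.strip().lower() for label, _ in scale.values()]
    if len(set(labels)) != len(labels):
        problems.append("two or more points share a label")
    for point, (label, explanation) in sorted(scale.items()):
        if not explanation.strip():
            problems.append(f"point {point} ({label}) has no expanded explanation")
    return problems


# Labels like these overlap unless each point is explained.
vague_scale = {1: ("Usually meets expectations", ""), 2: ("Often meets expectations", "")}
print(check_scale(FREQUENCY_SCALE))  # []
print(check_scale(vague_scale))      # two missing explanations
```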

4) Assessment design and validation

- Provide clear instructions

- Hide prior scores to reduce bias from anchoring effects.

- Use anonymous 360-degree feedback for development, not in performance management that affects pay and advancement decisions.

Involve subject matter experts. Pilot on a small cohort, then update based on feedback.

5) Link to action

Feed assessment results into learning plans, career pathways, and analytics (skills gap analysis, proficiency distribution). Set a validity period for each assessment so that re-assessments are generated according to risk, compliance, and regulatory requirements.
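The analytics and re-assessment steps reduce to simple calculations. The sketch below assumes proficiency levels stored as integers, a required level per competency in each role profile, and validity periods set in days by risk rating; the competency names, levels, periods, and function names are hypothetical.

```python
from collections import Counter
from datetime import date, timedelta

# Hypothetical validity periods by risk rating; set yours from risk,
# compliance and regulatory requirements.
VALIDITY_DAYS = {"high": 365, "medium": 730, "low": 1095}


def skills_gaps(required: dict, assessed: dict) -> dict:
    """Levels still needed per competency; an unassessed competency counts as level 0."""
    return {
        name: level - assessed.get(name, 0)
        for name, level in required.items()
        if assessed.get(name, 0) < level
    }


def proficiency_distribution(levels: list) -> dict:
    """How many people sit at each proficiency level, in level order."""
    return dict(sorted(Counter(levels).items()))


def reassessment_due(assessed_on: date, risk: str) -> date:
    """Date on which the assessment expires and a re-assessment should be generated."""
    return assessed_on + timedelta(days=VALIDITY_DAYS[risk])


required = {"Manual handling": 3, "Medication administration": 4, "Record keeping": 2}
assessed = {"Manual handling": 3, "Medication administration": 2}
print(skills_gaps(required, assessed))
# {'Medication administration': 2, 'Record keeping': 2}
print(proficiency_distribution([2, 3, 3, 1, 3]))  # {1: 1, 2: 1, 3: 3}
print(reassessment_due(date(2023, 1, 10), "high"))  # 2024-01-10
```

Treating a missing assessment as level 0 is a deliberate choice here: it surfaces unassessed requirements as gaps rather than hiding them.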

When to use AI (safely)

Use AI-assisted modelling to generate role-relevant suggestions, and save them as drafts in your competency library and role profiles for review. Keep a human in the loop, run duplicate checks, and never publish without subject matter expert approval.
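These guardrails can be expressed as a small draft-review workflow. This is a minimal sketch under stated assumptions: the AI suggestions arrive as plain text from whatever modelling tool you use (not shown), near-duplicates are detected with `difflib.SequenceMatcher` at an assumed 0.85 threshold, and the `Draft` class, its status values, and the helper functions are hypothetical.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Optional


@dataclass
class Draft:
    text: str
    status: str = "draft"              # draft -> approved -> published
    approved_by: Optional[str] = None  # SME who approved the wording


def is_near_duplicate(text: str, existing: List[str], threshold: float = 0.85) -> bool:
    """True if text closely matches any existing item (case-insensitive)."""
    return any(
        SequenceMatcher(None, text.lower(), item.lower()).ratio() >= threshold
        for item in existing
    )


def save_ai_suggestions(suggestions: List[str], library: List[str]) -> List[Draft]:
    """Save AI suggestions as drafts, skipping near-duplicates of the library or of each other."""
    drafts: List[Draft] = []
    for text in suggestions:
        if not is_near_duplicate(text, library + [d.text for d in drafts]):
            drafts.append(Draft(text))
    return drafts


def approve(draft: Draft, sme: str) -> None:
    draft.status = "approved"
    draft.approved_by = sme


def publish(draft: Draft) -> None:
    """Refuse to publish anything an SME has not approved."""
    if draft.status != "approved" or not draft.approved_by:
        raise ValueError("SME approval required before publishing")
    draft.status = "published"


library = ["Escalates complaints according to policy"]
drafts = save_ai_suggestions(
    ["Escalates complaints according to company policy", "Explains refund options to customers"],
    library,
)
print([d.text for d in drafts])  # ['Explains refund options to customers']
approve(drafts[0], sme="Team lead, Customer Service")
publish(drafts[0])
print(drafts[0].status)  # published
```

Putting the approval check inside `publish` means the rule holds however drafts are created, whether by AI or by hand.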

Common mistakes to avoid

Competency definition is too broad – competency items must be unambiguous and observable

- Don’t use personality traits as competency indicators – personality is a tendency to behave in a particular pattern and is not directly observable

- Vague adjectives like ‘effective’ – specify what effective means

Competency indicators cover multiple behaviours or aspects, so it is unclear what the rating relates to – keep to one behaviour or aspect per indicator

Mixing values with core competencies – keep values-based behaviours in performance management and core competencies in competency development

Overlapping rating scale anchors, such as “usually meets expectations”, “often meets expectations”, and “regularly meets expectations” – provide an expanded explanation for each rating point

Lack of validation – get buy-in and validation from a cohort of staff and managers

Lack of framework review – your operations and environment are constantly changing, and your competency frameworks must change too – review at least annually and record the review.