Misinformation and Disinformation: What You Need to Know in 2025

Key points from recent research and institutional publications listed in the References section.

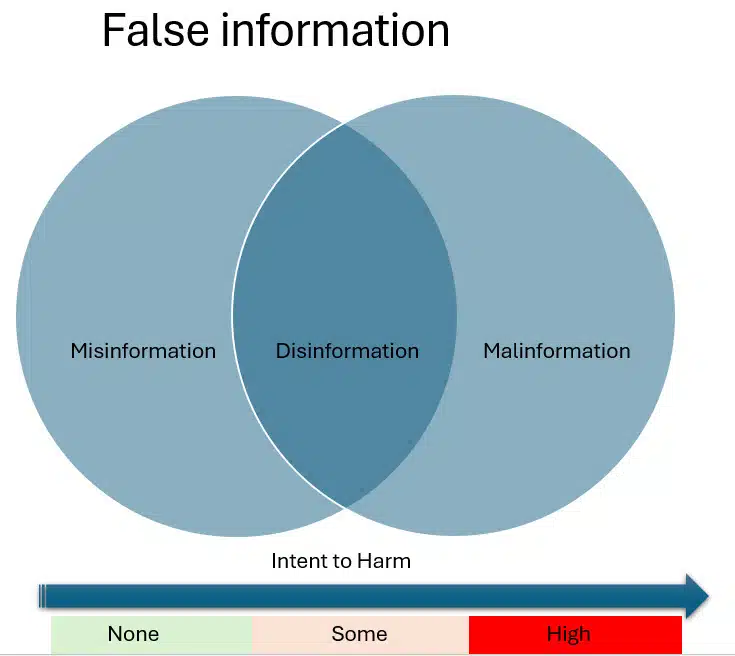

What is misinformation versus disinformation?

Definitions

We rely on the internet to find information on almost everything, but how much of this information is accurate?

Misinformation is inaccurate information posted in error.

Disinformation is inaccurate information deliberately posted to mislead so as to influence thoughts and actions.

Malinformation is information that is true but is posted to influence opinions or actions, usually by taking it out of context. Examples include posting a photo with a misleading caption and misusing private information, as in phishing. There are many examples in political communication.

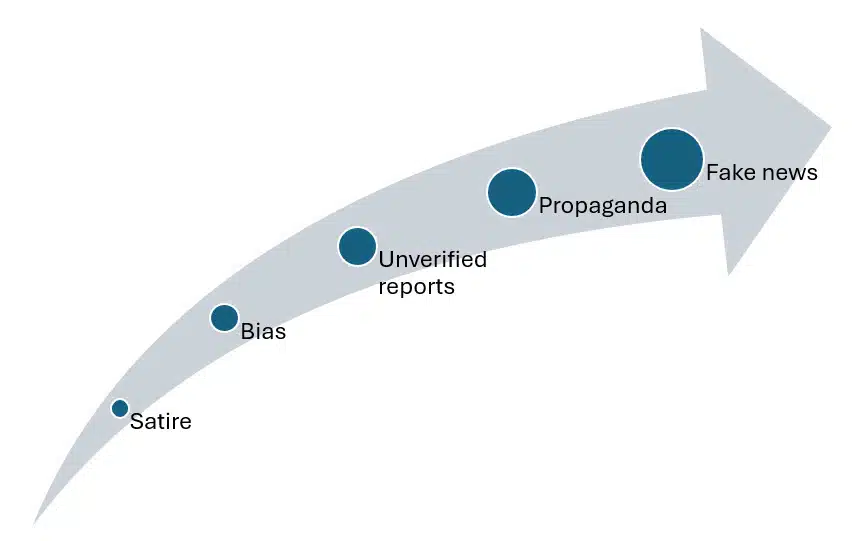

Degrees of misinformation

Satire and parody can be classified as misinformation – but there is no intent to harm – it is assumed the audience understands the information is distorted for entertainment.

Then there are biased communications – for example media articles and books written from a particular perspective, with no mention of other viewpoints, are misleading. They are essentially misinformation. Likewise material that provides no context can also be misleading.

Unverified reports – Many reports appear in mainstream and social media with no verification and no reference to the source of the information. This is true of some product claims, as well as some research and stories that are in fact rumor. At the extreme there are conspiracy theories. Depending on the intent, these reports can be misinformation or disinformation. Many who pass on such information are unaware that it may be false.

Propaganda is a deliberate attempt to strongly push a particular point of view, either with no reference to opposing views or by claiming they are completely false. This is disinformation.

Fake news is by definition deliberate falsification – disinformation. It is intended to influence and manipulate the opinion of its audience.

It includes clickbait: sensational headlines written to attract attention. Misuse of maps, charts, data tables, photos and other graphics, and false attribution of authorship or source, are also used. More recently, outright fabrications of images and information, and even entire fake websites, have appeared.

The role of AI? Generative AI unfortunately has a tendency to produce false information by itself, termed AI hallucination.

Prevalence & Consequences

Disinformation has always been around but the internet has made its creation and distribution exponentially faster and easier.

The majority of the world population, over 60%, is online. The total amount of content has exploded. A huge percentage of this content is shared selectively, with bias, fuelled by algorithms designed to maximise user engagement for financial gain (advertising income) or for political purposes. As a result, the reality that the internet audience perceives is invariably skewed.

Misinformation and disinformation have significant effects on society and individuals. At the individual level they can cause loss of reputation, adverse effects on health, and financial loss from hoaxes and scams. At the societal level they can result in loss of confidence in public institutions and services, as well as affecting political agendas and elections.

The Global Disinformation Index (GDI) publishes research on disinformation by country, the disinformation risk of news publishers and websites, and disinformation published on particular events and topics.

A 2023 Statista survey of adults in the US found that up to 66% reported seeing false information online daily. The older the respondent, the more likely they were to report this.

How have misinformation and disinformation become a problem?

Political and commercial agendas

There are strong political and commercial incentives to distribute misleading content that promotes particular interests, and denigrates the competition.

The advert-funded model of much of the web facilitates and has boosted the creation of misleading information. In particular, automated advertising auctions reward sites with many views, advert impressions and clicks. This is an incentive to produce sensational, often misleading, content that will attract users: “clickbait”.

Impact of AI

Much of the enormous proliferation of content and disinformation on the web is due to Artificial Intelligence (AI). AI has provided ways to easily generate text and manipulate it, as well as to manipulate images and audio-visual media. Using AI, actors can create realistic media that are similar, but not identical, to original content.

On the distribution side AI is deployed by tech platforms to grow user engagement. This is because of their need to reach as many users as possible to support the advertising revenue they depend on.

These algorithms have contributed to the extensive sharing and dissemination of misinformation. The algorithms promote the more contentious and sensational content to increase user engagement. Platforms track user behaviour across the internet using ‘Cookies’ embedded in sites users visit, as well as the particular fingerprint of a user’s browser. This browsing history is used to deliver search results in line with the user’s previous searches and interests.

Because sensational content is more popular, a feedback loop is created – promoting the creation and consumption of even more such content. In this way the algorithms affect the results of search, as well as material that is presented passively on websites.
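The feedback loop described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the click-through rates, the starting scores and the figure of 1000 impressions per round are invented for illustration, and no real platform's ranking algorithm is being reproduced.

```python
def simulate_feedback(rounds: int = 5) -> list[float]:
    """Return the share of exposure given to sensational content in each round."""
    # Hypothetical click-through rates: sensational items are clicked more often.
    click_rate = {"sensational": 0.10, "measured": 0.05}
    # Both kinds of content start with the same engagement score.
    score = {"sensational": 1.0, "measured": 1.0}
    shares = []
    for _ in range(rounds):
        total = sum(score.values())
        # Assumed ranking rule: exposure is proportional to past engagement.
        exposure = {kind: value / total for kind, value in score.items()}
        shares.append(exposure["sensational"])
        # Expected clicks from 1000 impressions feed back into the next round's score.
        for kind in score:
            score[kind] += 1000 * exposure[kind] * click_rate[kind]
    return shares


shares = simulate_feedback()
```

Under these assumptions the sensational share starts at one half and rises every round, because each round's extra clicks earn extra exposure in the next.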

The deployment of bots (software robots) by those with agendas also contributes to the dissemination of disinformation. Bots operate on social media platforms, posting and sharing content while posing as human contributors. They use fully or semi-automated fake user accounts.

Strategies for creating disinformation

Go to the website https://www.getbadnews.com/en/intro to see the various techniques used to create disinformation.

Passing oneself off as a credible news source – most people don’t check the listed source of information.

Using language that will evoke fear, anger or sympathy in the intended audience. For example, climate change and political content typically use the fear angle.

Playing on existing grievances that people have and making them seem more important than they really are.

Promoting the view that unexplained events are the fault of unseen organizations or groups. This tactic works when information on an event is very sparse.

An actor planting and promoting information that discredits opponents and competitors. This also diverts attention away from the fact they are themselves spreading misinformation.

Trolls exist to interact with others individually. They attract engagement by posting on hot-button topics, are usually anonymous, make outlandish claims, often flip-flop on them, and continue discussions interminably. Trolls share misleading posts and leave hundreds of comments a week.

Troll farms are groups of people who create fake online profiles and may engage with each other to create the illusion of widely shared opinions.

Why do people share disinformation?

Studies of misinformation in social media have found that some factors predispose individuals and groups to share misinformation.

- Anxiety – those who are worried about the topic are more likely to share.

- Source ambiguity – when the source is clear it is more likely to be perceived as trustworthy and shared.

- Content ambiguity – content that is clear is more likely to be shared, especially when the person has personal involvement.

- Family and Social relationships – information received from friends and family is more likely to be passed on.

- Confirmation bias – we tend to pass on information that we believe in and that appeals to us.

- Those with lower levels of literacy are more likely to pass on misinformation and disinformation.

- Information we receive on our devices is more trusted and passed on than information received in other formats.

Online information is passed on more often because it is so easy.

Why do people believe disinformation?

People are more likely to believe information received on their personal devices, especially when it comes from friends and family or from social and political groups they are associated with.

Perceived authority of other sources also influences acceptance. Repetition also promotes acceptance.

Information that accords with their existing beliefs is more readily accepted. Those with lower levels of literacy are less likely to question received information.

In crisis situations, such as the early days of Covid, people are more likely to accept unverified reports. This is because they have a strong need to understand the situation.

How to detect misinformation and disinformation

Detection requires the ability to distinguish true from false material. This means:

- fact checking – comparison with other material and thereby assessing its veracity

- assessing the intent of the material

- assessing the metadata – the sharer’s attributes and therefore trustworthiness

That’s very hard for the average person, although there are fact checking websites.

Can AI itself help?

The key questions

- is AI effective in detecting mis/disinformation?

- is it appropriate to use AI – since AI is itself responsible for much of the proliferation of misinformation and disinformation?

Detection of Disinformation through Language Analysis

Automated detection of false information requires language analysis.

The huge amount of information to be reviewed is itself a challenge, in large part because bots are used to deliberately create and distribute false information. Much of this information is unstructured, especially that in social media. This unstructured data has to be extracted into a meaningful format using text mining methods. Sentences are split up and key words identified, ready for analysis.

Natural Language Processing (NLP) is automated analysis based on statistical frequency of word occurrences and relationships – proximity to other words.
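The text mining and frequency steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the stop-word list, the two-word proximity window and the sample text are assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical stop-word list; real systems use much larger ones.
STOP_WORDS = {"the", "a", "an", "is", "of", "and", "to", "in", "that", "this"}


def split_sentences(text: str) -> list[str]:
    """Split text into sentences after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def key_words(sentence: str) -> list[str]:
    """Lower-case a sentence and keep the words that are not stop words."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOP_WORDS]


def word_frequencies(text: str) -> Counter:
    """Count how often each key word occurs across all sentences."""
    return Counter(w for s in split_sentences(text) for w in key_words(s))


def co_occurrences(text: str, window: int = 2) -> Counter:
    """Count pairs of key words that appear within `window` positions of each other."""
    pairs = Counter()
    for sentence in split_sentences(text):
        words = key_words(sentence)
        for i, word in enumerate(words):
            for other in words[i + 1 : i + 1 + window]:
                pairs[tuple(sorted((word, other)))] += 1
    return pairs


# Invented sample text, used only to exercise the functions.
sample = "The vaccine is a miracle cure. Doctors say the miracle cure is unproven!"
freq = word_frequencies(sample)
pairs = co_occurrences(sample)
```

Here `freq` holds word counts and `pairs` holds proximity counts, the two kinds of statistics the paragraph above refers to. Neither says anything about whether the text is true.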

Text is complex – the same message can be expressed in many different ways.

AI can identify simple sentences, but it has difficulty when more complex text is involved. It is unable to handle the nuances introduced by sarcasm and by cultural variations in language.

Therefore classification by textual analysis programs using natural language processing algorithms is not very effective.

Machine learning programs can learn from humans who flag a piece of text as true or false. The program can then review the characteristics of the text, find patterns and make classification decisions based on correlations. This semi-supervised machine learning method can enhance accuracy.
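As a rough sketch of learning from human-flagged text, the example below trains a small word-count Naive Bayes classifier. Naive Bayes is chosen here only because it is compact; the sources do not say which algorithm is used in practice. The four training sentences and their labels are invented. The classifier learns correlations between wording and labels, not truth itself.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes over word counts with add-one smoothing."""

    def __init__(self) -> None:
        self.word_counts: dict[str, Counter] = {}
        self.doc_counts: Counter = Counter()
        self.vocab: set[str] = set()

    def train(self, labelled: list[tuple[str, str]]) -> None:
        """Record word counts for each label assigned by a human reviewer."""
        for text, label in labelled:
            self.doc_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for word in tokenize(text):
                counts[word] += 1
                self.vocab.add(word)

    def predict(self, text: str) -> str:
        """Return the label whose training texts the wording most resembles."""
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = "", -math.inf
        for label, counts in self.word_counts.items():
            # Log prior: how often humans assigned this label.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(counts.values())
            for word in tokenize(text):
                # Add-one smoothing keeps unseen words from zeroing the score.
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented toy examples standing in for human-flagged text.
flagged = [
    ("shocking secret cure doctors hide from you", "false"),
    ("miracle pill melts fat overnight shocking", "false"),
    ("health agency publishes trial results for new vaccine", "true"),
    ("study reports modest results in peer reviewed trial", "true"),
]
model = NaiveBayes()
model.train(flagged)
```

Calling `model.predict("shocking miracle cure")` returns the label whose flagged examples share the most wording with the input. With so few examples the result shows only the mechanism.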

Automated Fact-Checking for Disinformation Detection

In the context of disinformation, fact-checking refers to the process of verifying information to confirm the accuracy of purported fact-based statements.

When humans fact check information they look at the context as well as the words. There are many aspects of context: time frame, historical background, the individuals involved, locations, and other factors related to events, such as the weather at the time.

Humans analyse the evidence, assess the reliability and validity of the source of the information, and cross check the claims with multiple other sources.

AI needs to be trained as to what is true versus false. This means training material must be provided, and humans have to provide guidance on this, at least initially.

Politifact and others have compiled databases of fact checks. Currently these are very limited in terms of the number of checks, whereas AI needs very large numbers of records (millions) to produce meaningful results.

A further challenge is that, for effective training of algorithms, such databases need to have balanced examples of both true and false information on a wide range of topics.

Deepfake audio-visual material can only be detected by comparison with the original material and a complete record of a person’s activity.

Detection of Disinformation through Sentiment Analysis

Theoretically, by analyzing the sentiment associated with news or content, and determining whether there is emotional polarization, indications that the content is false can be identified.

Again, humans are needed to train the AI system on what is true or false. Research has established that the data used for training is itself inevitably biased. Humans providing guidance create more bias or amplify existing bias in the data.
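A very simple way to picture the sentiment signal is a lexicon-based score of how emotionally charged the wording is. The sketch below is illustrative only: the word weights and the 0.2 threshold are invented, and a high score indicates charged language, not falsehood.

```python
import re

# Hypothetical mini-lexicon: word -> sentiment weight between -1 and 1.
LEXICON = {
    "outrage": -0.9, "disaster": -0.8, "betrayal": -0.9, "terrifying": -0.9,
    "miracle": 0.9, "amazing": 0.8, "hero": 0.7,
    "reported": 0.0, "announced": 0.0,
}


def polarization(text: str) -> float:
    """Return the mean absolute sentiment weight per word (0 means neutral wording)."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return sum(abs(LEXICON.get(word, 0.0)) for word in words) / len(words)


def flag_for_review(text: str, threshold: float = 0.2) -> bool:
    """Flag emotionally charged text for human review; not a verdict on truth."""
    return polarization(text) >= threshold


charged = "Terrifying betrayal: a disaster for everyone"
neutral = "The council reported the budget on Tuesday"
```

With this lexicon `flag_for_review(charged)` is true and `flag_for_review(neutral)` is false. Since satire and sincere reporting on tragic events also score highly, a flag is best treated as a prompt for human checking.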

How to counter disinformation

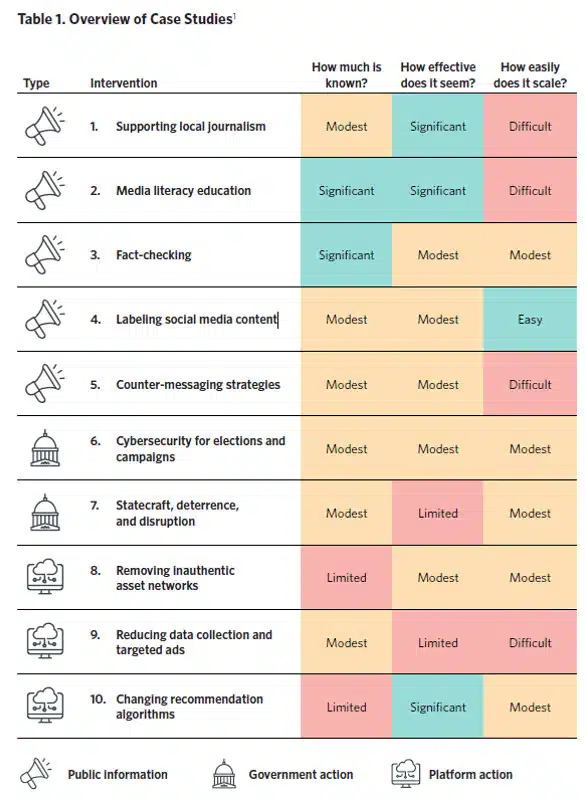

The 2024 report “Countering Disinformation Effectively: An Evidence-Based Policy Guide” by Jon Bateman and Dean Jackson for the Carnegie Endowment for International Peace lists the various methods that could be used, how much is known about each from research, how difficult each would be to implement, and how effective each is likely to be.

From this we can see that the easiest and most effective ways currently are to improve journalism, media literacy and the recommendation algorithms of the tech platforms.

Improve the quality of journalism

Many news media writers now provide opinion pieces from biased partisan perspectives, and the media itself tends to align with a preferred political agenda. This bias is not transparent.

Ideally, journalism organizes information for the public in a way that promotes the public interest. It should be free from bias, with sources of information clearly provided and points of contention identified. The media should be a forum for the public to hold power to account. It should present news in a balanced way, without overblowing or underemphasizing threats and risks.

Stories should be based purely on the facts, supported by evidence. The language should be neutral – without emotive or positive/negative adjectives. Where there are two or more sides to a story, the report should cover all perspectives equally.

Improve code of practice at tech platforms

Some of the major tech platforms have a code of practice for detecting and managing false information. However, there is no access to their data and algorithms, and therefore no way of auditing them. Currently there seem to be no real protections for the basic rights of individuals.

Change platform reliance on advertising revenue

Reducing the reliance of platforms on advertising would reduce the amount of sensational and false content designed to attract user attention, and thus advertising revenue. However, introducing pay-for-service models would be very difficult and would raise ethical issues of its own.

Counter messaging

Three key strategies fall under this heading: debunking, inoculation (prebunking) and nudging.

Debunking is the correction of misinformation. It is most effective when the explanation covers why the claim may be deemed correct, highlights the incorrect information, gives the reasons why it is wrong, and provides the facts: the alternative explanation and why it is correct.

However, it may not convince those who hold strong views. Fact checking is labour intensive, and the debunking of messages may not stay top of mind, so effects diminish over time.

Prebunking uses the psychological theory of inoculation. The technique is to expose individuals to a false claim and counter arguments before they encounter the related disinformation: “You may see reports that …. but be aware that this is not true because …”. The suggestion is that warning users of the possibility of misinformation will improve their awareness and judgment of misinformation generated by chatbots and other malign actors.

In accordance with inoculation theory, booster messages at intervals help maintain the effect. This strategy is thought more likely to be effective than debunking, and easier to apply at scale.

Nudges are prompts to consider the authenticity of information before sharing it. They can be specific, calling attention to accuracy and truth, as well as calling attention to community standards for sharing information. The limited research has shown mixed results to date.

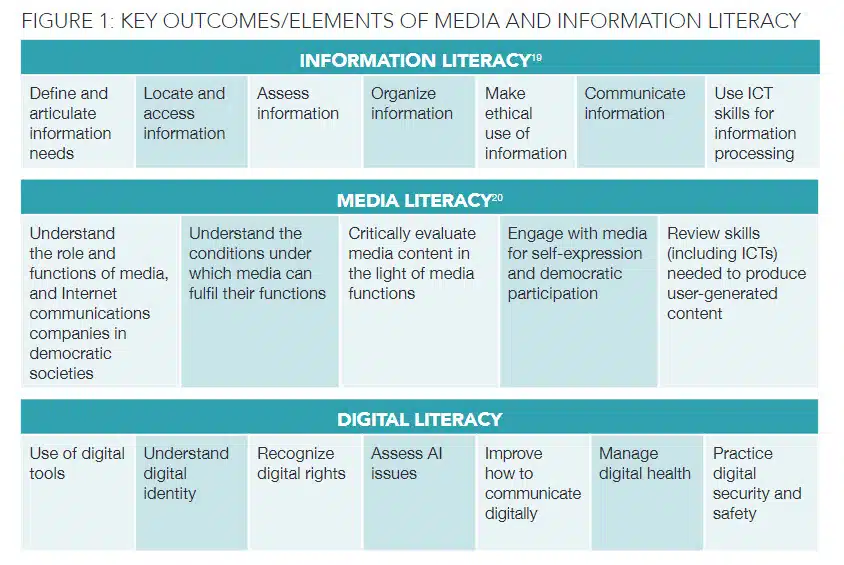

Improve media literacy

The UNESCO Media Literacy Initiative has listed the key knowledge and skills people need in order to understand their information needs, and to locate, retrieve and evaluate the quality of the content they can access in a digital world.

Improve transparency regarding the origin of information

It is recommended that each piece of content should contain information on:

- how it was produced

- sponsors if any

- where it is available

- the target audience

This would enable those who access the information to better assess its validity. Currently only some academic research studies provide this information.
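One way to picture the recommended disclosure is as a small record attached to each piece of content. The sketch below is hypothetical: the class name, field names and sample values are invented for illustration and do not follow any existing metadata standard.

```python
from dataclasses import dataclass, field


@dataclass
class ProvenanceRecord:
    """Hypothetical provenance disclosure for one piece of content."""

    production_method: str                                   # how it was produced
    sponsors: list[str] = field(default_factory=list)        # sponsors, if any
    available_at: list[str] = field(default_factory=list)    # where it is available
    target_audience: str = ""                                # the target audience

    def missing_fields(self) -> list[str]:
        """List the disclosures left empty; sponsors may legitimately be empty."""
        required = {
            "production_method": self.production_method,
            "available_at": self.available_at,
            "target_audience": self.target_audience,
        }
        return [name for name, value in required.items() if not value]


# Invented example values; example.org is a placeholder domain.
record = ProvenanceRecord(
    production_method="Staff reporting with two named sources",
    available_at=["example.org/news"],
    target_audience="General public",
)
```

A reader or a platform could call `record.missing_fields()` to see which disclosures are absent before deciding how much weight to give the content.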

Critical Thinking skills

For individuals and organizations Critical Thinking skills are now one of the most important competencies.

Critical thinking skills are used in problem solving and consist of:

- Interpretation

- Analysis

- Reasoning

- Evaluation

References

1. Jon Bateman and Dean Jackson. 2024. Countering Disinformation Effectively: An Evidence-Based Policy Guide. Carnegie Endowment for International Peace.

2. Noémi Bontridder and Yves Poullet. 2021. The role of artificial intelligence in disinformation. Data & Policy 3: e32.

3. Fátima C. Carrilho Santos. 2023. Artificial Intelligence in Automated Detection of Disinformation: A Thematic Analysis. Journalism and Media 4: 679–687.

4. Shahbaz, Funk, Brody, Vesteinsson, Baker, Grothe, Barak, Masinsin, Modi, Sutterlin, eds. 2023. Freedom on the Net 2023. Freedom House. freedomonthenet.org.

5. Sadiq Muhammed T and Saji K. Mathew. 2022. The disaster of misinformation: a review of research in social media. International Journal of Data Science and Analytics 13: 271–285.

6. Donghee Shin and Fokiya Akhtar. 2024. Algorithmic Inoculation Against Misinformation: How to Build Cognitive Immunity Against Misinformation. Journal of Broadcasting & Electronic Media 68 (2): 153–175.

7. Damiano Spina, Mark Sanderson, Daniel Angus, Gianluca Demartini, Dana McKay, Lauren L. Saling, and Ryen W. White. 2023. Human-AI Cooperation to Tackle Misinformation and Polarization. Communications of the ACM 66 (7).

8. Wojciech Mazurczyk, Dongwon Lee, and Andreas Vlachos. 2024. Disinformation 2.0 in the Age of AI: A Cybersecurity Perspective. Communications of the ACM 67 (3).

9. Gretchen A. Peck. 2024. AI Changes Everything. Editor & Publisher, May 2024.

10. L. Illia, E. Colleoni, and S. Zyglidopoulos. 2023. Ethical implications of text generation in the age of artificial intelligence. Business Ethics, the Environment & Responsibility 32 (1): 201–210.

11. Fatemeh Torabi Asr and Maite Taboada. 2019. Big Data and quality data for fake news and misinformation detection. Big Data & Society, January–June 2019: 1–14.